How the Cloud Improves Transparency at the State Department, USAID and NOAA

The ability to collect, review and distribute field data is critical to the U.S. Agency for International Development’s mission of promoting democracies worldwide.

But much of USAID’s raw data originates in some of the most remote places in the world. In the past, that’s left the agency’s workers with no standardized way to transmit and access this crucial information. Even more troublesome, historical data that might be useful for comparative and analytical purposes often resides in multiple, partner-created databases that can’t be easily accessed or shared across the enterprise.

To solve this problem — and in its own small way, help further democracy — USAID turned to the cloud.

For the past two years, the agency’s Chief Data Officer Brandon Pustejovsky and his team have collected open data via a centralized website, known as the Development Data Library (DDL). Now, his team is migrating that information to a highly secure but public cloud.

Transparency and access to information are key tenets of democratic governments. Today, agencies accomplish those goals via the cloud.

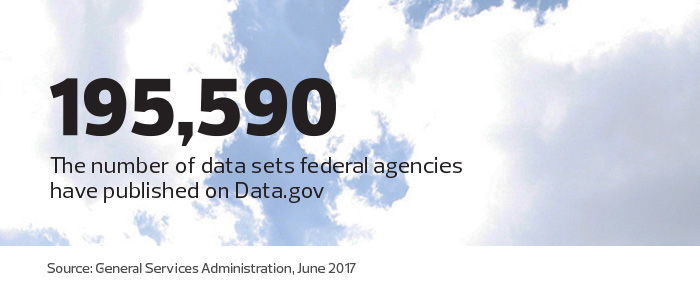

The Obama administration fortified that standard in 2009 when it directed agencies to begin publishing high-value “open” data sets online. Since then, agencies have accelerated their open-government efforts to boost civic efforts and improve the work of their employees. Today, nearly 200,000 government-owned data sets are available for download at the Data.gov website.

At USAID, cloud computing will provide an easy, automated way to include details on raw data sets. The agency’s goal is to move the full DDL into the cloud in 2018. IT officials would then begin connecting other portals and legacy data sources.

Users will also be able to create their own visualizations of specific data sets.

“Data is really only valuable in context,” Pustejovsky says. “When a submission comes in to the cloud, we’ll not only have the file, but we’ll also have the metadata that allows us to fully describe it when we push it back out. Ultimately that gives the end user an ability to interact with the data that’s much more informed by context.”

Agencies Achieve Transparency Through Technology

Joshua New, a policy analyst at the Center for Data Innovation, says open data is best thought of as “machine-readable information that is freely available online in a nonproprietary format and has an open license, so anyone can use it for commercial or other use without attribution.” In 2013, former President Barack Obama issued an executive order declaring this type of data the default delivery model.

“I don’t think we could expect agencies to provide open data as we know it today without cloud services,” New says. “It’s just not feasible to publish these enormous data sets by trying to build your own infrastructure. You really need the kind of on-demand, scalable storage, agility and manageability that the cloud provides.”

Agencies have moved their data to the cloud more slowly than many hoped, he says, but the pace is accelerating.

The State Department, for example, is evaluating options to move its data sets — on topics from historical visa issuance to geographical information depicting the Ebola virus outbreak in West Africa — onto a mix of private and public cloud platforms.

“When we look at the specific transparency, the public participation and the collaboration aspects of open government, the cloud is ideally suited to fulfilling both the letter and the spirit of open government and open data,” says Karen Mummaw, the State Department’s deputy CIO for business, management and planning. “There is a long line of people in this agency who want to put their open data into the cloud.”

Keep Data Clean to Protect Privacy

Plenty of challenges remain, from business processes to data formatting, in transferring open data to cloud platforms, Pustejovsky says.

When agencies move to a unified environment, for example, they must apply the rules of that environment to data sources across the board, a task that can be difficult for an organization with a broad international footprint such as USAID. Agencies also must normalize data so users of varying sophistication levels can access the information.

Working carefully with data up front helps guard against the “mosaic effect,” the idea that by combining seemingly innocuous individual pieces of data, users can piece together information that would constitute a privacy or security risk. Putting data in the cloud, with its agile and predictive analytical tools, can increase that risk.

For Mummaw, a stringent review process minimizes the likelihood of the mosaic effect. Pustejovsky says USAID’s open-data policy requires partners and other data collectors to remove personally identifiable information before sending data files.

“We don’t want to assume necessarily that the data we receive has been completely cleaned and de-identified,” he explains. “So we also have a five-step internal review that covers security, privacy, records management, legal and overall risk to make sure we’re comfortable with everything we make available to the public.”

Private-Sector Cloud Partners Keep Data Flowing

Publishing an agency’s full repository to the cloud can be prohibitive, New says. In addition, agencies often don’t have the pipeline needed for users to download public data. Consider the National Oceanic and Atmospheric Administration (NOAA), which collects 10 to 15 terabytes of data each day, almost all of which is in huge demand.

NOAA recently took steps to solve this by setting up research agreements with five major cloud providers, including IBM and Microsoft, to build the infrastructure needed to house NOAA’s open data sets at no cost. Ed Kearns, NOAA’s chief data officer, says the effort is already yielding results.

“With the level of service they offer, the time it takes for the user to access the data is now almost instantaneous,” Kearns explains. “As a result, we’ve seen the utilization of the data more than double, compared with what we were able to provide in the past through our traditional distribution vehicles.”

New notes other agencies should watch the NOAA collaboration, as it might become the standard for how agencies distribute open-data resources.

“It’s a win-win, because those providers want to build products with this data and they have customers who want that data,” says New. “That they would be willing to do that for free is fascinating because it shows how important the data is and also how valuable it is.”