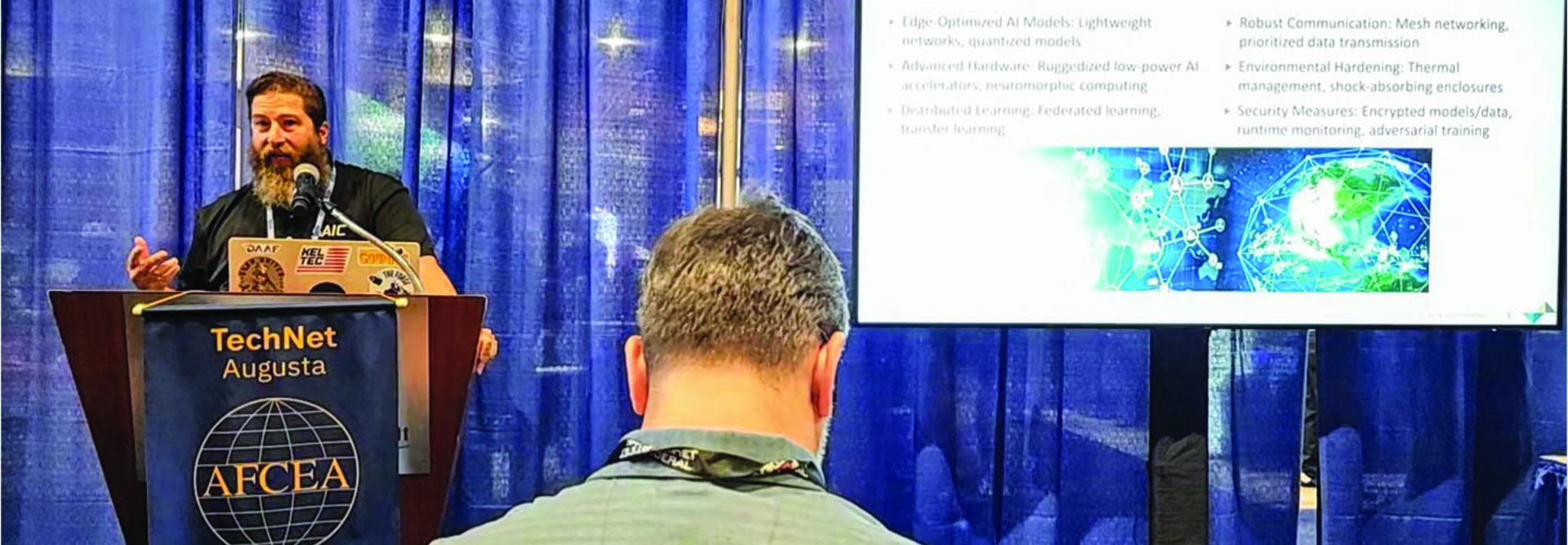

Mitigating Environmental and Security Threats at the Tactical Edge

Edge optimization takes AI models that are “heavy” in terms of compute and processing and makes them more “lightweight” by, say, compressing neural networks. Calculations can also be cut off once a certain confidence level is reached, which still ensures high accuracy but at four times the processing speed.
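The confidence cutoff described above resembles what is often called early-exit inference. The following is a minimal sketch of that idea, not SAIC's implementation: the function names, the stage interface and the 0.9 threshold are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(x, stages, threshold=0.9):
    """Run model stages in order and stop once one is confident enough.

    Each stage is a hypothetical callable: stage(hidden) -> (hidden, logits),
    where logits come from a small classifier attached to that stage.
    Returns (label, confidence, stages_used).
    """
    if not stages:
        raise ValueError("at least one stage is required")
    hidden = x
    for used, stage in enumerate(stages, start=1):
        hidden, logits = stage(hidden)
        probs = softmax(logits)
        confidence = max(probs)
        label = probs.index(confidence)
        if confidence >= threshold:
            break  # skip the remaining, more expensive stages
    return label, confidence, used
```

If no stage reaches the threshold, the sketch falls back to the prediction from the final stage, so the worst case costs the same as running the full model.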

Distributed federated learning is another mitigation method, where models retrain themselves only after edge nodes reconnect and start sharing data again.
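One common way to combine what edge nodes have learned is federated averaging, in which nodes share model weights rather than raw data. The article does not say which algorithm is used, so the sketch below is an assumption; the node dictionary keys (`connected`, `weights`, `num_samples`) are hypothetical.

```python
def federated_average(updates):
    """Average weight vectors, weighting each node by its sample count.

    updates: list of (weights, num_samples) tuples.
    """
    total = sum(count for _, count in updates)
    if total <= 0:
        raise ValueError("updates must contain at least one sample")
    dim = len(updates[0][0])
    return [
        sum(weights[i] * count for weights, count in updates) / total
        for i in range(dim)
    ]


def aggregate_round(global_weights, nodes):
    """Fold in updates only from nodes that have reconnected.

    Disconnected nodes are skipped; if nobody is reachable, the
    current global weights are kept unchanged.
    """
    updates = [
        (node["weights"], node["num_samples"])
        for node in nodes
        if node["connected"] and node["num_samples"] > 0
    ]
    if not updates:
        return list(global_weights)
    return federated_average(updates)
```

A node that trained on more local samples pulls the average further toward its weights, which is the usual design choice when nodes see uneven amounts of data.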

A robust mesh network can prioritize and transmit the messages that AI is inferencing, using chain-of-thought learning with generative AI to optimally route each transmission based on its payload and the metadata around it, Meil said.
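The prioritization half of that idea can be illustrated with a plain priority queue. This sketch deliberately swaps the generative AI routing Meil describes for a hand-written scoring rule; the `kind` metadata field, the urgency values and the class name are all hypothetical.

```python
import heapq
import itertools


class PriorityOutbox:
    """Queue outgoing messages and send the most urgent ones first."""

    # Hypothetical urgency table keyed on a "kind" metadata field.
    URGENCY = {"alert": 100, "telemetry": 10}

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order

    def _score(self, payload, metadata):
        urgency = self.URGENCY.get(metadata.get("kind"), 1)
        size_penalty = len(payload) / 1024  # prefer small messages on thin links
        return urgency - size_penalty

    def submit(self, payload, metadata):
        score = self._score(payload, metadata)
        # heapq is a min-heap, so negate the score to pop the highest first.
        heapq.heappush(self._heap, (-score, next(self._order), payload))

    def drain(self, budget_bytes):
        """Send queued messages in priority order until the budget runs out."""
        sent = []
        while self._heap and len(self._heap[0][2]) <= budget_bytes:
            _, _, payload = heapq.heappop(self._heap)
            budget_bytes -= len(payload)
            sent.append(payload)
        return sent
```

Messages that do not fit in the current bandwidth budget stay queued for the next window, which suits links that come and go.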

On the security side of the equation, encryption can be applied to data transmissions and blockchain employed to verify that sensor data is intact.
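The integrity check can be illustrated with a simple hash chain, the core data structure behind a blockchain. This is a minimal sketch under stated assumptions: it has no consensus, signatures or encryption, and the record layout and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first block


def _digest(prev_hash, reading):
    """Hash a reading together with the previous block's hash."""
    body = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_reading(chain, reading):
    """Add a sensor reading as a new block linked to the previous one."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "reading": reading,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, reading),
    })


def verify_chain(chain):
    """Return True only if no block has been altered or reordered."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != _digest(prev_hash, block["reading"]):
            return False
        prev_hash = block["hash"]
    return True
```

Because each hash covers the one before it, changing any earlier reading invalidates every block that follows.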

Models can be hardened by training them with adversarial learning to recognize when they’re being exposed to harmful influences. SAIC has a computer vision model that examines satellite images of ships, and it’s been hardened against distorted images by exposing it to images whose orientation and size have been altered or to which noise has been added.
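The distortions mentioned above (orientation, size and noise) can be sketched as random perturbations applied to training images. This is an illustration only, not SAIC's pipeline: images are assumed to be 2D lists of grayscale values in [0, 1], the scale factors and noise level are arbitrary, and gradient-based adversarial attacks are not shown.

```python
import random


def rotate90(img):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def scale_nearest(img, factor):
    """Resize with nearest-neighbor sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [
        [img[min(h - 1, int(r / factor))][min(w - 1, int(c / factor))]
         for c in range(new_w)]
        for r in range(new_h)
    ]


def add_noise(img, sigma, rng):
    """Add Gaussian noise and clamp each pixel back into [0, 1]."""
    return [
        [min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
        for row in img
    ]


def perturb(img, rng):
    """Apply a random rotation, a random rescale and noise to one image."""
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        img = rotate90(img)
    img = scale_nearest(img, rng.choice([0.5, 1.0, 2.0]))
    return add_noise(img, sigma=0.05, rng=rng)
```

During training, each perturbed image would keep the label of the original, so the model learns that a rotated or noisy ship is still a ship.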


Having a process for data quality checks, which verify that sensors are sending the right data in the right format before soldiers are alerted, is a best practice, Meil said.
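A data quality check of that kind might look like the sketch below, which validates a reading before any alert is raised. The field names, value ranges and function names are hypothetical; a real deployment would use the schema of its own sensors.

```python
import math


def _is_number(value):
    """True for finite ints and floats, excluding booleans."""
    return (
        isinstance(value, (int, float))
        and not isinstance(value, bool)
        and math.isfinite(value)
    )


# Hypothetical schema: each field maps to a validity check.
SCHEMA = {
    "sensor_id": lambda v: isinstance(v, str) and v != "",
    "timestamp": lambda v: _is_number(v) and v >= 0,
    "lat": lambda v: _is_number(v) and -90 <= v <= 90,
    "lon": lambda v: _is_number(v) and -180 <= v <= 180,
}


def check_reading(reading):
    """Return a list of problems; an empty list means the reading is usable."""
    problems = []
    for field, is_valid in SCHEMA.items():
        if field not in reading:
            problems.append(f"missing field: {field}")
        elif not is_valid(reading[field]):
            problems.append(f"invalid value for {field}: {reading[field]!r}")
    return problems


def should_alert(reading):
    """Only readings that pass every check are allowed to trigger an alert."""
    return not check_reading(reading)
```

Returning the full list of problems, rather than failing on the first one, makes it easier to see whether a sensor is misconfigured or just dropped a single field.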

By far the biggest challenge to AI adoption in DDIL environments is training the “human element” to operate and trust models through data science literacy, Meil said.

“Being able to invert and essentially understand, back to raw data, how the system is making decisions increases the trust levels,” he said. “Increasing the trust level increases adoption, and then adopting it is going to help achieve the mission.”

SAIC developed an interface that allows soldiers to point and click or drag and drop to explore data with built-in models that generate visuals to explain what is happening. The company is working with the Navy, Marine Corps and combat commands to put that capability in the hands of mission owners.

“We’ve actually found that the inference gets better, the models sometimes get better and they’re faster at learning these things because they understand the mission better than the data scientists, the data engineers and the software engineers,” Meil said.