Computer Vision: How Feds Can Use AI to Advance Beyond Image Processing

At Fort Belvoir in Springfield, Va., less than 20 miles from downtown Washington, D.C., sits the massive headquarters of the National Geospatial-Intelligence Agency, where intelligence analysts pore over satellite images of everything from natural disasters to missile equipment and the movements of terrorist networks.

NGA analysts at the 2.3 million-square-foot headquarters collect and examine geospatial intelligence and distribute data to the Defense Department and national security community. And in the not-too-distant future, they may have a lot more help from computers.

NGA is one of the federal agencies that is most interested in computer vision technology. Director Robert Cardillo has said on the record several times that he hopes to use the technology to augment (not replace) human analysts. The goal is to use the artificial intelligence that underpins computer vision to automate certain kinds of image analysis, which will free up analysts to perform higher-level work.

Computer vision is more sophisticated than traditional image processing, but it is still a relatively nascent technology, certainly within the federal government. Although tech giants such as Google and Microsoft promote the technology, the government is still in the exploratory stages of adopting it. However, computer vision has the potential to reshape how certain agencies achieve their missions, especially those that involve object detection and threat analysis.

In May, the White House convened a summit on AI and established a Select Committee on Artificial Intelligence under the National Science and Technology Council. The committee will advise the White House on interagency AI R&D priorities; consider the creation of federal partnerships with industry and academia; establish structures to improve government planning and coordination of AI R&D; and identify opportunities to leverage federal data and computational resources to support our national AI R&D ecosystem.

Notably, the federal AI activities the committee is charged with coordinating include “those related to autonomous systems, biometric identification, computer vision, human-computer interactions, machine learning, natural language processing, and robotics,” according to the AI summit’s summary report.

Computer vision is clearly on the minds of many across the government. But what is the technology, how is it different from image processing, and how might federal agencies use it to advance their missions?


What Is Computer Vision Technology?

Computer vision is not one technology but several, combined to form a new kind of tool. In the end, it is a method for acquiring, processing and analyzing images, and it can use machine learning techniques to automate tasks that human visual analysis performs.

One way to imagine computer vision technology, industry analysts say, is as a stool with three legs: sensing hardware, software (algorithms, specifically) and the data sets they produce when combined.

First, there is the hardware: camera sensors that acquire images. These can be on surveillance cameras or satellites and other monitoring systems. “The majority of use cases that are out there incorporate looking at video,” says Carrie Solinger, a senior research analyst for IDC’s cognitive/artificial intelligence systems and content analytics research.

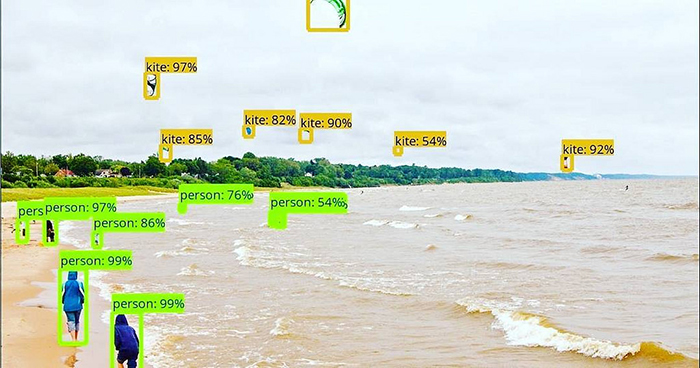

Computer vision technology can aid agencies in object detection. Photo: ShashiBellamkonda, Flickr/Creative Commons

However, Solinger says, the analysis is often retrospective as opposed to real-time streaming because the software to perform that kind of analysis is not mature enough yet.

The computing power required to analyze images has advanced significantly, but Solinger contends the algorithms haven’t quite caught up. However, she notes that Google, Microsoft and other tech companies are trying to build such algorithms to process images in real time or near real time.

Werner Goertz, a Gartner analyst who covers AI, says that algorithms continue to exploit improving camera and sensor technology. “The data sets are a result of the commoditization of cameras, the corresponding improvements algorithmically,” he says. “All of this leads to more and more data sets.”

The more computer vision systems are deployed, Goertz notes, the more opportunity exists to create, define and exploit the data sets they create. Large technology companies “are all recognizing that we are on the threshold where this stuff can scale, can become affordable and can become a disruptive and major part of all of our lives, because it’s going to affect us all in one shape or form.”

Computer Vision vs. Image Processing: Understand the Difference

What separates computer vision from image processing? Image processing works off of rules-based engines, Goertz notes. For example, one can apply rules to a digital image to highlight certain colors or aspects of the image. Those rules generate a final image.
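To make the rules-based idea concrete, here is a minimal Python sketch of a fixed rule applied to a digital image. It is illustrative only: the function name `highlight_red`, the threshold value and the tiny sample image are assumptions made for this example, not anything described by Goertz.

```python
# Illustrative sketch: an image is a list of rows of (R, G, B) tuples.
# The rule below is hand-written and never changes, which is the defining
# trait of rules-based image processing.

def highlight_red(image, threshold=150):
    """Keep strongly red pixels as they are and turn every other pixel gray."""
    output = []
    for row in image:
        new_row = []
        for r, g, b in row:
            if r >= threshold and r > g and r > b:
                new_row.append((r, g, b))           # rule matched: keep the color
            else:
                gray = (r + g + b) // 3             # rule not matched: desaturate
                new_row.append((gray, gray, gray))
        output.append(new_row)
    return output


# Hypothetical 2x2 sample image.
image = [
    [(200, 10, 10), (10, 200, 10)],
    [(90, 90, 90), (255, 0, 0)],
]
result = highlight_red(image)
print(result)
```

The same input always produces the same output, and the rule is only as good as the person who wrote it.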

Computer vision, on the other hand, is fueled by machine learning algorithms and AI principles. Rules do not govern the outcome of the image analysis — machine learning does. And with each processing of an image by the algorithms that underpin computer vision platforms, the computer refines its techniques and improves. This, Goertz notes, means that computer vision results “in a higher and higher probability of a correct interpretation the more times you use it.”
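As a rough illustration of learning from examples rather than following hand-written rules, the sketch below uses a nearest-centroid classifier, a deliberately simple stand-in for the far larger models used in real computer vision platforms. The class name, the two features and the sample images are all assumptions made for this example.

```python
# Illustrative sketch: the classifier is never told a rule such as
# "bright means day". It only sees labeled examples and adjusts its
# per-label averages each time a new example arrives.

class CentroidClassifier:
    def __init__(self):
        self.sums = {}    # label -> running sum of each feature
        self.counts = {}  # label -> number of examples seen

    def learn(self, features, label):
        totals = self.sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            totals[i] += value
        self.counts[label] = self.counts.get(label, 0) + 1

    def predict(self, features):
        def squared_distance(label):
            n = self.counts[label]
            return sum((s / n - v) ** 2
                       for s, v in zip(self.sums[label], features))
        return min(self.counts, key=squared_distance)


def features_of(image):
    """Reduce a grayscale image (rows of 0-255 ints) to two simple features."""
    pixels = [p for row in image for p in row]
    mean_brightness = sum(pixels) / len(pixels) / 255
    bright_fraction = sum(p > 128 for p in pixels) / len(pixels)
    return [mean_brightness, bright_fraction]


model = CentroidClassifier()
model.learn(features_of([[200, 220], [210, 230]]), "day")
model.learn(features_of([[10, 20], [30, 15]]), "night")

prediction = model.predict(features_of([[180, 190], [170, 200]]))
print(prediction)
```

Each additional labeled example shifts the stored averages, which is a small-scale version of the refinement Goertz describes.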

Solinger adds that the major difference between computer vision and image processing is that image processing is actually a step in a computer vision process. The main difference, she says, is “the methods, not the goals.”

Computer vision encompasses hardware and software. Image processing tools look at images and pull out metadata, and then allow users to make changes to the images and render them how they want. Computer vision starts with image processing and then uses algorithms to generate the data that the computer vision system analyzes, Solinger says.

How Are Computer Vision Algorithms Used?

The most sophisticated computer vision algorithms are based on a kind of artificial intelligence known as a convolutional neural network. A CNN is a type of artificial neural network, and is most often used to analyze visual imagery.
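The operation that gives a CNN its name can be sketched in a few lines of plain Python. This is a minimal illustration, not a working network: the kernel here is chosen by hand to respond to vertical edges, whereas a real CNN learns its kernel values during training and stacks many such layers. The function names and sample image are assumptions made for this example.

```python
# Illustrative sketch of one convolutional step. As in most CNN libraries,
# the kernel is slid over the image without being flipped (technically a
# cross-correlation).

def convolve2d(image, kernel):
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for a in range(kernel_h):
                for b in range(kernel_w):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Common CNN activation: negative responses are set to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 9, 9] for _ in range(4)]
vertical_edge_kernel = [[-1, 1],
                        [-1, 1]]

feature_map = relu(convolve2d(image, vertical_edge_kernel))
print(feature_map)
```

The output is strongest in the column where dark meets bright, which is how a convolutional layer turns raw pixels into a map of where a feature appears.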

What computer vision algorithms bring to the table, Goertz contends, is the scalability and ability to memorize outcomes. “We no longer need to capture and store large amounts of video data,” he says. Instead, computer systems can store the fact that a person was present at a certain location at a certain time and wandered from point A to point B.

Computer vision can automate the process, extract that metadata about an image or video and then store the metadata without the image having to be stored. “We’ll have to then think about where it’s desirable for this information to be captured,” he says.
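A hedged sketch of what storing metadata instead of video might look like follows. The record layout, field names and sample values are hypothetical and do not reflect any agency's or vendor's actual schema; the sketch assumes an upstream computer vision system has already produced the individual sightings.

```python
# Illustrative sketch: keep a compact record of who was seen where and when,
# rather than the video frames themselves.
from dataclasses import dataclass
import json


@dataclass
class Sighting:
    track_id: str    # hypothetical identifier assigned by the vision system
    location: str
    timestamp: str   # ISO 8601, so string order matches time order


def summarize_track(sightings):
    """Collapse a series of sightings into one movement record."""
    ordered = sorted(sightings, key=lambda s: s.timestamp)
    first, last = ordered[0], ordered[-1]
    return {
        "track_id": first.track_id,
        "from": first.location,
        "to": last.location,
        "first_seen": first.timestamp,
        "last_seen": last.timestamp,
    }


sightings = [
    Sighting("person-17", "point B", "2018-07-30T09:05:00"),
    Sighting("person-17", "point A", "2018-07-30T09:00:00"),
]
summary = summarize_track(sightings)
print(json.dumps(summary))
```

A record like this takes a few hundred bytes, compared with the megabytes of footage it summarizes, which is also why the question of where such information should be captured matters.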

There are still biases that affect computer vision systems, especially for facial recognition, Solinger says. Many are able to identify white males with about 90 percent accuracy, but falter when trying to recognize women or people of other races. Computer vision systems are obviously not totally accurate. Indeed, last week, Amazon’s facial recognition tools “incorrectly identified Rep. John Lewis (D-Ga.) and 27 other members of Congress as people arrested for a crime during a test commissioned by the American Civil Liberties Union of Northern California,” the Washington Post reports.

Computer vision can be used not just for facial recognition but for object detection, Solinger says. Computer vision can explore the emotion and intent of individuals. For example, if surveillance cameras capture footage of an individual who is in a database of those considered by law enforcement to be a threat, and that person is walking toward a federal building, computer vision tools could analyze the person’s gait and determine that they are leaning heavily on one side, which could mean the individual is carrying a bomb or other dangerous object, she says.

NGA, TSA Interested in Using Computer Vision to Enhance Missions

NGA Director Robert Cardillo has been a proponent of computer vision. In June 2017, at a conference in San Francisco hosted by the United States Geospatial Intelligence Foundation, Cardillo said that at some point computers may perform 75 percent of the tasks NGA analysts currently do, according to a Foreign Policy report.

At the time, Cardillo said the NGA workforce was “skeptical,” if not “cynical” or “downright mad,” about the idea of computer vision technology becoming more of a presence in analysts’ work and potentially replacing them.

According to Foreign Policy, Cardillo said he sees AI as a “transforming opportunity for the profession” and is trying to show analysts that the technology is “not all smoke and mirrors.” The message Cardillo wants to get across is that computer vision “isn’t to get rid of you — it’s there to elevate you. … It’s about giving you a higher-level role to do the harder things.”

Cardillo wants machine learning to help analysts study the vast amounts of imagery of the Earth’s surface. Foreign Policy reports:

Instead of analysts staring at millions of images of coastlines and beachfronts, computers could digitally pore over images, calculating baselines for elevation and other features of the landscape. NGA’s goal is to establish a “pattern of life” for the surfaces of the Earth to be able to detect when that pattern changes, rather than looking for specific people or objects.
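The idea of calculating a baseline and then detecting when the pattern changes can be illustrated with a small Python sketch. This is not NGA's method: the grid of elevation values, the three-standard-deviation threshold and the function names are all assumptions chosen to keep the example short.

```python
# Illustrative sketch: learn what "normal" looks like for each grid cell
# from past observations, then flag cells in a new observation that fall
# well outside that normal range.
from statistics import mean, pstdev


def build_baseline(history):
    """Return a grid of (mean, standard deviation) pairs, one per cell."""
    rows, cols = len(history[0]), len(history[0][0])
    baseline = []
    for r in range(rows):
        baseline_row = []
        for c in range(cols):
            values = [grid[r][c] for grid in history]
            baseline_row.append((mean(values), pstdev(values)))
        baseline.append(baseline_row)
    return baseline


def changed_cells(baseline, observation, k=3.0, floor=0.5):
    """Flag cells more than k deviations from the baseline mean.

    `floor` is an assumed minimum deviation so that cells which never
    varied in the history are not flagged for trivial differences.
    """
    flagged = []
    for r, row in enumerate(observation):
        for c, value in enumerate(row):
            mu, sigma = baseline[r][c]
            if abs(value - mu) > k * max(sigma, floor):
                flagged.append((r, c))
    return flagged


# Hypothetical elevation readings for a 2x2 patch over three past passes.
history = [
    [[10, 10], [12, 12]],
    [[10, 11], [12, 13]],
    [[10, 9], [12, 11]],
]
new_pass = [[10, 10], [12, 20]]

flagged = changed_cells(build_baseline(history), new_pass)
print(flagged)
```

Only the cell whose value departs sharply from its own history is flagged, so an analyst would be pointed to the change rather than asked to look at every image.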

And in September, Cardillo said the NGA was in talks with Congress to swap years’ worth of historical data the agency has for computer vision technology from private industry. Such a “public-private partnership” could help the agency in overcoming its challenges to deploying AI, Federal News Radio reports.

An interior view of the atrium of the National Geospatial-Intelligence Agency Campus East. Photo: Marc Barnes, Flickr/Creative Commons

“The proposition is, we have labeled data sets that are decades old that we know have value for those that are pursuing artificial intelligence, computer vision, algorithmic development to automate some of the interpretation that was done strictly by humans in my era of being an analyst,” he said at an intelligence conference hosted by Georgetown University, according to Federal News Radio. “And so that partnership is one that we’re discussing with the Hill now to make sure we can do it fairly and openly.”

Solinger notes that computer vision can help agencies categorize and analyze images, because manually coding everything can be extremely time-consuming and expensive. “The federal government is sitting on tons and tons and tons of image data, and they have all of this historical data that they could process that can help them understand different security concerns or learn from past mistakes,” she says.

Meanwhile, in May, the Transportation Security Administration and Department of Homeland Security’s Science and Technology Directorate released a solicitation for new and innovative technologies to enhance security screening at airports.

The solicitation, under S&T’s Silicon Valley Innovation Program, is called “Object Recognition and Adaptive Algorithms in Passenger Property Screening.” TSA says its goal is to “automate the detection decision for all threat items to the greatest extent possible through the application of artificial intelligence techniques.”

Analysts say that this can be done but will require TSA to take other measures. “To detect nefarious objects or materials in luggage or even on persons, it will probably take more than traditional camera sensors within the spectrum of light that humans can capture,” Goertz says.

Solinger says TSA will need to feed real-time 3D videos into a computer vision system for it to accurately detect threats in luggage or cargo. “You can’t look at a flat image,” she says, adding that “it is going to be prudent to have that information in real time.”

And in June, the DHS S&T unit said it is “looking to equip drones with different sensors useful in search-and-rescue, reconnaissance, active shooter response, hostage rescue situations, and a myriad of border security scenarios.” Throughout 2018, S&T will be selecting commercially available sensors and will demonstrate them at Camp Shelby in Mississippi, according to the agency. Notably, DHS says that on land, “some drones may need to be able to use a variety of computer-vision enhancements and navigational tools as well as specialized deployment methods (i.e.: truck-mounted, back-packable, tethered, etc.).”